Flame Graph Viewer

The story of profiling

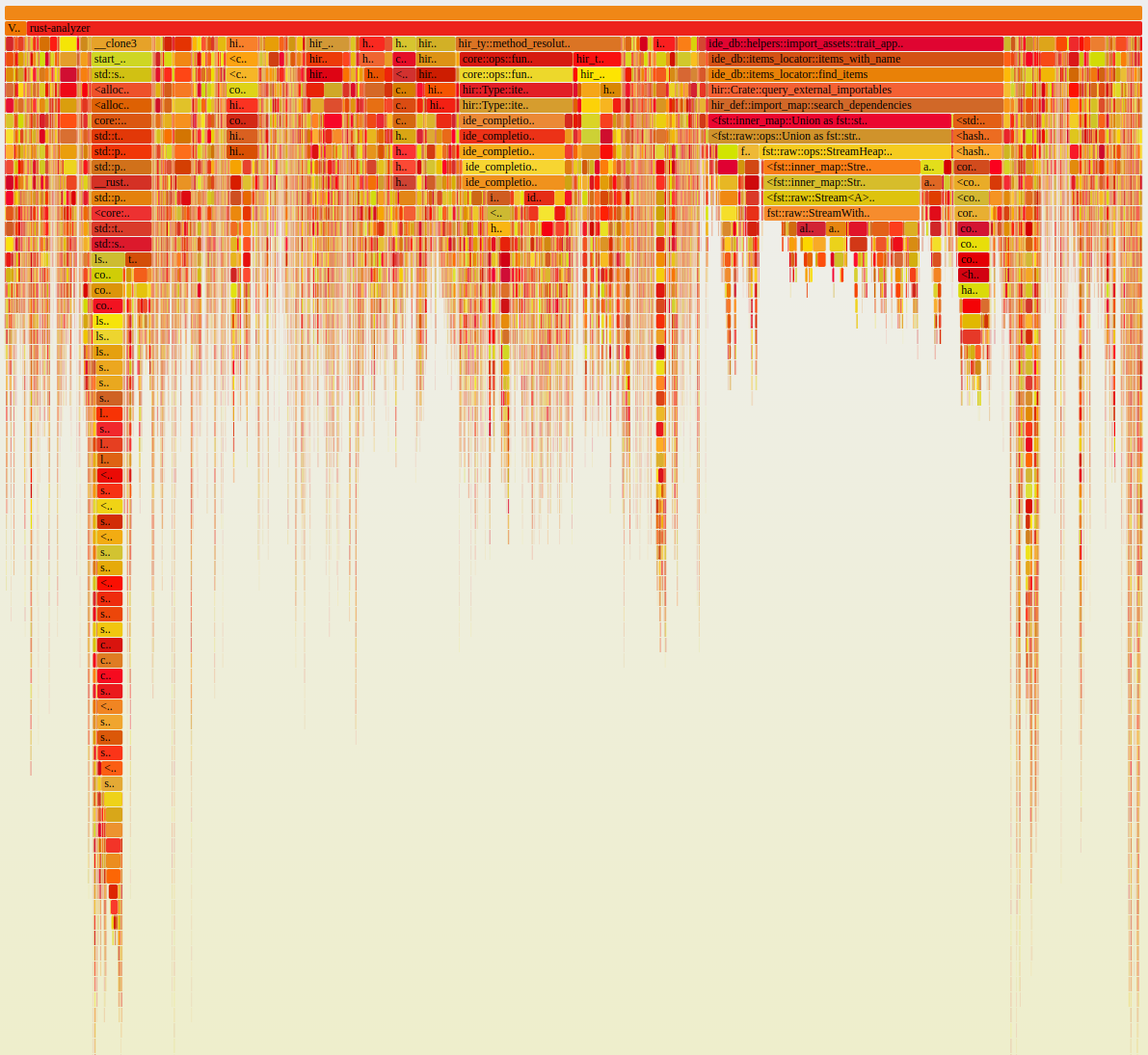

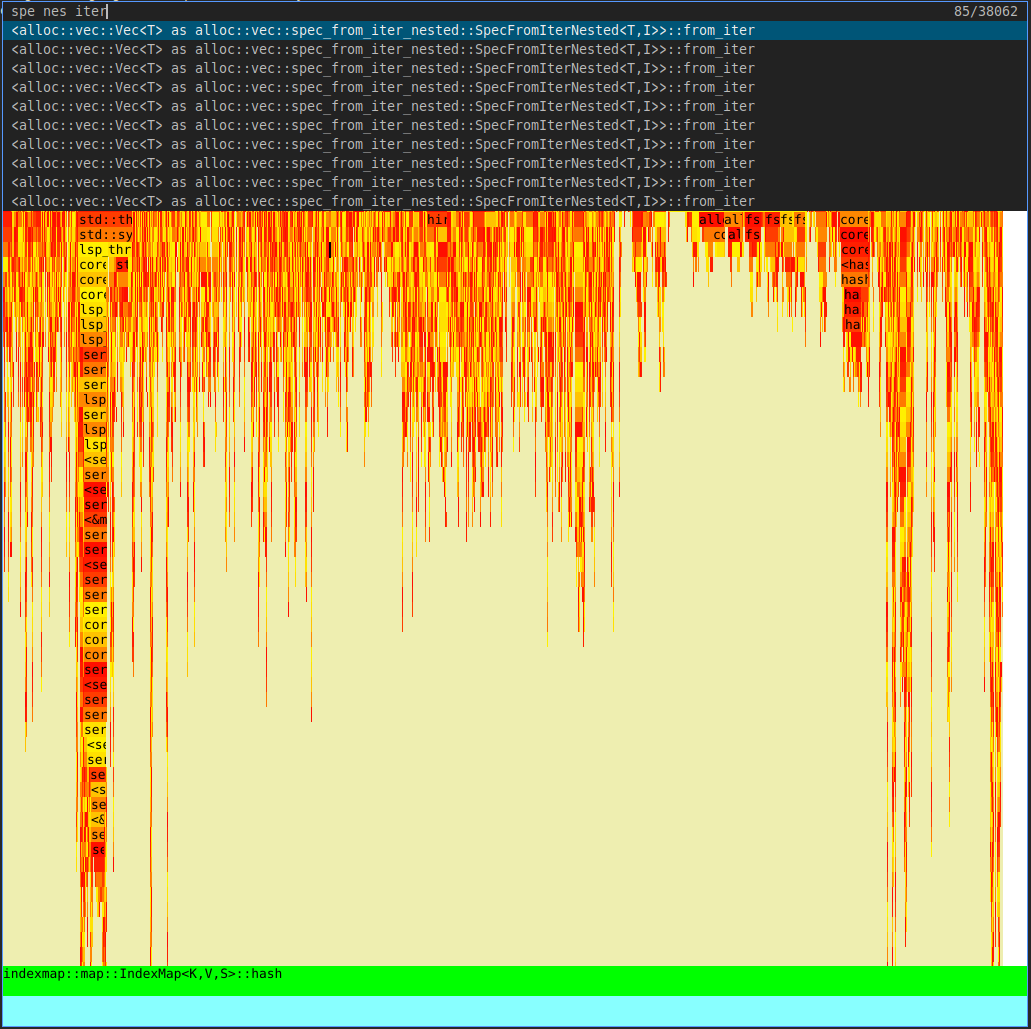

There is something in Rust Analyzer that I would like to fix. This requires understanding its interaction with Chalk. To find the starting point I ran Rust Analyzer with Linux Perf to get the tree of calls represented in a Flame Graph. The Flame Graph was so big, that it was rendered in the browser for quite a few seconds. The hover events were delayed. Nothing happened when I tried to open a frame of the graph. Reading the text representation of the call trees was hard, and I am used to flame graphs. After a little adventure I created my FlameGraphViewer.

The alternatives

I tried to find something fast and native. Saying "native" I mean something which doesn't require a browser. W3C specifications are bigger than POSIX. A modern browser complies with those specifications. I have a strong belief that it's wrong using something big like that to draw an interactive graph. A browser brings thousands of vulnerabilities.

The application Hotspot is written in Qt and C++, but it doesn't render my flame graph fast enough. Another option is https://profiler.firefox.com/, but it doesn't work offline and still requires a browser. My computer has 64GB RAM, 8 Cores each with 3.5 GHz and a gaming graphic card. With this machine I can't draw a graph. I decided to make a viewer of flame graphs. I had been thinking of practicing in GUI application development. Now I have an opportunity to try it.

My picture of a Flame Graph

As the flame graph had a lot of rectangles, my initial idea was to find a GUI toolkit which would allow you to make 1000 buttons. This is because I saw every rectangle as a button. Each rectangle has a caption, border, background, and you can click it. Also, I had a thought in my mind that it shouldn't consume too much memory. I remember playing StarCraft: Brood War on the PC with 32MB RAM. Sometimes there were battles with 800 zerlings on the map. So 1000 buttons should not be a big deal for a modern computer.

The adventure

The adventure has 2 parts: the analysis of software which I can use as the foundation for my application, and the challenges I faced.

Choosing a GUI toolkit and the amount of code

Speaking of the language, I will use Rust as it is currently my favorite language. As I need to draw a window with some controls, I need a GUI toolkit. Which one should I choose? Back in university I had some experience in the field of GUI development. I still have some knowledge in this area. I worked with Qt 4.6 and GTK 2. Additionally, as I used C++, I worked with GTKmm. Previously, I worked with Windows and did some Windows Forms and had some exposure to WinAPI.

Since that time things have changed. I'm happy to see new options for Linux which can be used with Rust. Some of the toolkits are written in Rust. Such as Egui, Slint, Iced. I have played with Egui a little bit. I wanted to make a game and Egui helped me to make the user interface for the game. Also, I remember that someone made a spreadsheet with 1 million cells with Egui. I don't believe that 1000 buttons will be an issue.

I ran the example hello_word from Egui and it consumed of 53MB… of resident memory… in release mode. That was a bit unexpected, because I remember that my university projects consuming about 5MB in release mode and 15MB in debug. Also, I couldn't help but remember that 800 zerlings in StarCraft (with their animations) fit on a 32MB computer and I need just 1000 buttons.

I understand that there are plenty of things implemented in the library. Those nice effects, animations and text rendering with customizable fonts aren't for free. Drawing letters is a quite little list of solved problems. And making a convenient GUI toolkit with a friendly API is a great challenge. Egui does provide a friendly API. But I'm not gonna use most of the things.

Also, I understand that the memory consumption depends on the used data structures.

- The memory allocator can spread some trees or linked lists across the entire address space in some cases.

- The memory can also be used for some caches to reduce disk readings and make the user interface more responsive.

- The memory pages can be poorly aligned. The Rust compiler deals with it sorting the fields, but it's not applied for structures which are decorated with

#[repr(C)].

These issues can arise from the layers of abstractions created to provide a nice API for solving typical, tasks faster. However, my task is not that typical. Including all these nice features will make the user paying for it. I don't want to make users pay for things they don't use. Moreover, I didn't want to delve into the library's source code to understand how to optimize it, because was to create a solution and return to working on Rust Analyzer.

I took GTK 4, because it was something familiar and I was wondering how the memory consumption changed for a Hello World. 83MB. It became interesting how much I should pay for 1000 buttons. 103MB. Following this, I started every GUI toolkit. A check included running a "Hello World" program and running a "Hello World" program with 1000 buttons. I even switched from Rust to C++ to check Qt. The results are below.

| Name | Version | Memory (MB) | Memory with 1000 buttons |

| Egui | 0.21.3 | 53 | 65 |

| GTK 4 | 4 | 83 | 103 |

| Iced | 0.9.0 | 147 | 148 |

| Qt (C++) | 6.5.1 | 71 | 69 |

| FLTK | 1.3.8 | 12 | 46 |

Slint is not in the table as I couldn't find a way to create 1000 buttons programmatically. The Hello World program with one button is 73MB. The version of Slint I had is 1.0.0. FLTK is definitely a winner. The blocker I came across was binding the key Tab and the Shift-Tab combination for lists. It's hardcoded:

static int navkey() { // ... switch (Fl::event_key()) { // ... case FL_Tab: if (!Fl::event_state(FL_SHIFT)) return FL_Right; return FL_Left; // ... } return 0; }

It's not a problem in C++. I could have made a child class from it if I had written the program in C++. I haven't found any way to change it in the Rust binding. This limitation made me think that it's not a good start.

Let's go without a GUI toolkit

After the results of my research, I started thinking that probably removing a GUI toolkit from the stack is not a bad idea. In Linux you can use Xlib to create windows, handle input and draw something. Previously, I had some experience with Xlib. Also, I slightly patched my terminal ST and Dmenu. Both are written with Xlib only. When I was younger, I even tried to make own GUI toolkit in D language being inspired by HermitGUI and DlangUI. Also, I tried to make a markdown viewer. In that viewer, I couldn't make text selection across multiple sections of the text. I couldn't find a way of doing this, because I implemented every section as a window in that viewer. Therefore, every event of mouse click, release and move is assigned to its own window.

The deal with Xlib is that the documentation is like quests in The Elder Scrolls: Morrowind. You gather pieces from the entire world to get a complete picture. The last time I did it, the gathering was hard and fun. Therefore, in order to deal with Xlib I will need to read some code of other projects and probably the source code of Xlib itself. Well, I'm a mature programmer and not afraid of reading large amount of C code.

Apart from making windows, I need to render some text. In my case the rendering is easier because:

- I don't need to support multiple languages. That's because I have never seen a program written in something other than the Roman alphabet (as used in English). Therefore, I don't need to support right to left writing either.

- I need to use only the monospace font. Programmers like to read the code in monospace fonts, because symbols

lI1|look different. Another reason is that a "dot" occupies the same space as a regular symbol. - I don't need formatting, different sizes, line breaks or word wraps.

With this in mind, I took two dependencies: x11-dl for Xlib bindings and RustType to render text.

Unexpected challenges

I created 1000 buttons with Xlib, and it consumed 3.5 MB memory. I was happy to see this number. The application loads instantly. However, I wouldn't have written the article if I hadn't met any challenge.

Unexpected challenges go first. It would have been confusing if I had started with the expected challenges.

Perf format

Linux Perf creates a binary with the recorded events. I expected to read the binary file to avoid using stackcollapse-perf.pl. Reading of the binary file would allow my program to depend only on Linux Perf, but I didn't understand the file format. Luckily, I found this command in the blog of Brendan Gregg (the author of stockcollapse.pl):

perf report --stdio --no-children -n -g folded,0,caller,count -s comm | \ awk '/^ / { comm = $3 } /^[0-9]/ { print comm ";" $2, $1 }' > collapsed.perf

After that, I had a look at the format of the output file and found that an event has one number. In order to understand what the number means, I read the code of flamegraph.pl. The logic was more or less clear apart from this piece:

$Tmp{$k}->{delta} += $i == $len_b ? $d : 0;

As far as I'm aware, I am not the only one who is confused by this. I decided to ignore it for now.

Another aspect I couldn't understand is the value for a perf event can have various formats. In the stackcollapse.pl, you can find this regular expression to extract any variant.

($stack, $samples) = $stack =~ (/^(.*)\s+?(\d+(?:\.\d*)?)$/);

So far, I decided to avoid regular expressions and to process just one integer after the last space.

let split = line.split(char::is_whitespace).rev(); let samples = split.next()?;

Scrolling consumes up to 50% CPU

As I don't have a pre-existing widget for scrolling, I had to create one myself. My initial design of the widget involved making a big canvas and moving a viewport showing only some area of it.

I made a pixmap and a window. The pixmap is the big canvas and the window is the viewport. The pixmap is drawn from a certain offset with a static length. Technically, the drawing is copying the pixels from the pixmap to the window. The problem is Xlib doesn't allow to make pixmaps that are too big. As a result, I needed to move the buttons themselves.

The simple solution to move the buttons is simply changing the coordinates of each button. I implemented the solution quickly: it's a loop over the 1000 buttons. Every iteration of the loop processes the respective button. The processing involves calculation of the new coordinates for the button and calling XMoveWindow. It's stupid, but I wanted to see the numbers to be sure that it is stupid. It consumes 30-35% of CPU. I want less.

The processing, which runs every iteration, should run only when necessary. The obvious criteria of the necessity is visibility of the button. That says that only the visible buttons should be scrolled. Let's solve the problem then. The basic solution, I can do, is unmapping those buttons which are beyond the viewport. To explain, if a button is beyond the boundaries of the viewport, I call XUnmapWindow. If a button is in the viewport, even partially, I call XMapWindow. As a result, XMoveWindow is still called, but it costs nothing for the unmapped windows.

The CPU consumption dropped to 10-15%. It's better, but I still wasn't happy with the numbers, because it's just scrolling.

It's better to avoid recalculating the coordinates of those buttons that are unmapped. To achieve this, I need to maintain the range of visible buttons. The coordinates of the invisible buttons aren't recalculated. Also, I can stop calling XMoveWindow for unmapped windows.

Steps of the algorithm

- Make a range of the visible buttons.

- Maintain the range when scrolling happens.

- Change the offset.

- Move the visible buttons by the offset.

- If the button is out of the viewport, exclude it from the range.

- Pick the first button from those beyond the viewport, and if the viewport has space for it, move it by the offset and include it in the range.

I failed to implement the algorithm correctly the first time. Buttons didn't appear in the viewport after a couple of scrolls. What happened? My implementation of the algorithm involved having 2 stacks of buttons. One stack is above the viewport and one stack is beyond the viewport. The unexpected thing happened at the initialization of the program. At that moment, the buttons weren't in a stack. The buttons laid in a row next to each other.

When the user scrolled the window, the first "invisible" button was moved by the offset distance, but from its initial position. The idea of the fix was to place the first "invisible" button right after the last "visible" button.

Finally, the CPU load was barely above 0%.

Parsing the file with the Perf folded data

The Perf data in the folded format looks like this

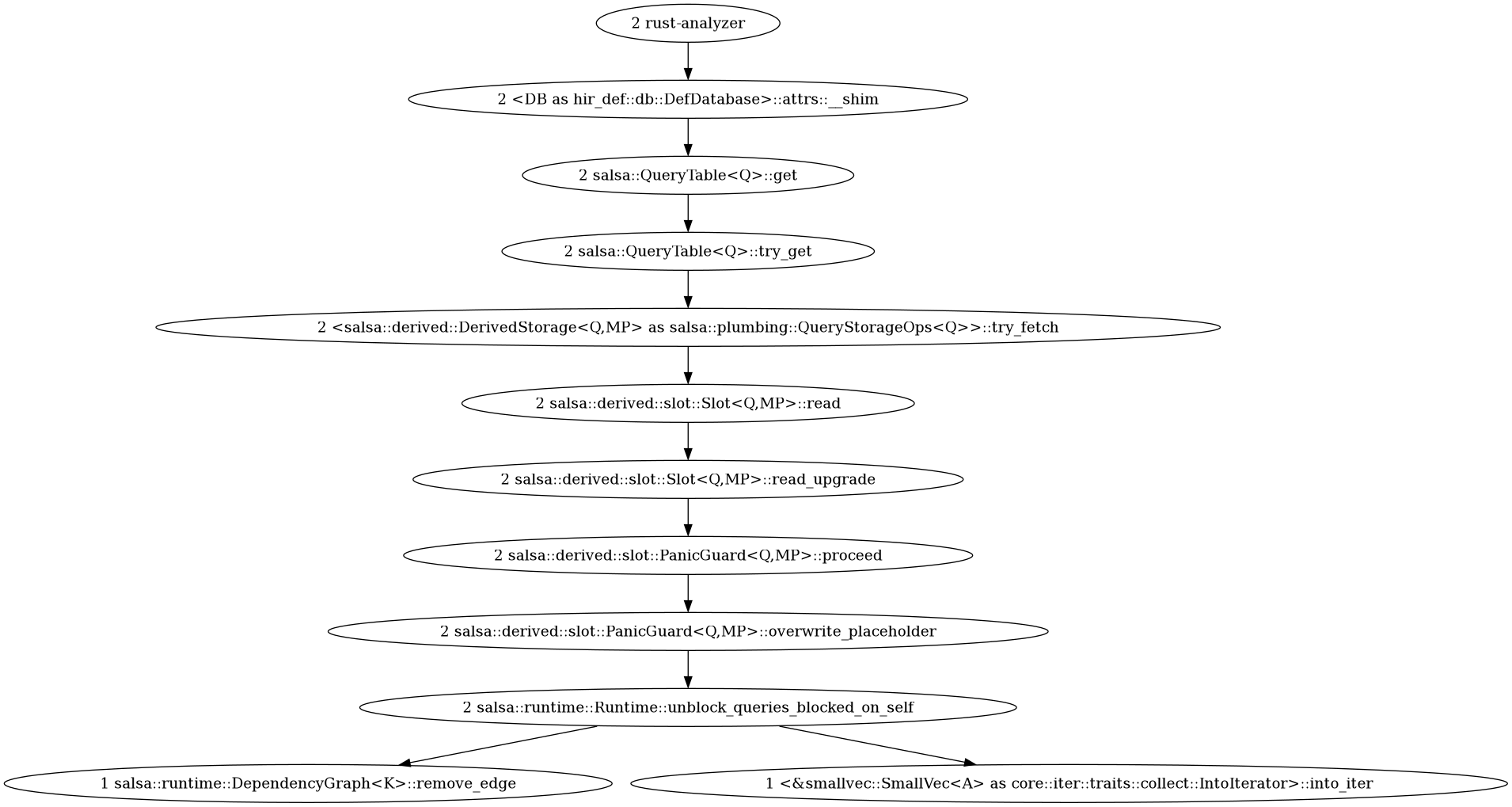

rust-analyzer;<DB as hir_def::db::DefDatabase>::attrs::__shim;salsa::QueryTable<Q>::get;salsa::QueryTable<Q>::try_get;<salsa::derived::DerivedStorage<Q,MP> as salsa::plumbing::QueryStorageOps<Q>>::try_fetch;salsa::derived::slot::Slot<Q,MP>::read;salsa::derived::slot::Slot<Q,MP>::read_upgrade;salsa::derived::slot::PanicGuard<Q,MP>::proceed;salsa::derived::slot::PanicGuard<Q,MP>::overwrite_placeholder;salsa::runtime::Runtime::unblock_queries_blocked_on_self;salsa::runtime::DependencyGraph<K>::remove_edge 1 rust-analyzer;<DB as hir_def::db::DefDatabase>::attrs::__shim;salsa::QueryTable<Q>::get;salsa::QueryTable<Q>::try_get;<salsa::derived::DerivedStorage<Q,MP> as salsa::plumbing::QueryStorageOps<Q>>::try_fetch;salsa::derived::slot::Slot<Q,MP>::read;salsa::derived::slot::Slot<Q,MP>::read_upgrade;salsa::derived::slot::PanicGuard<Q,MP>::proceed;salsa::derived::slot::PanicGuard<Q,MP>::overwrite_placeholder;salsa::runtime::Runtime::unblock_queries_blocked_on_self;salsa::runtime::DependencyGraph<K>::remove_edge;<&smallvec::SmallVec<A> as core::iter::traits::collect::IntoIterator>::into_iter 1

These lines are pretty long, but in the model, I have in my mind, they are like a tree with two leaves.

Every parent has the value of its children plus its own. Also, they have names. A simple parser should do just fine. Let's run it… it took 7 seconds to load. Why? Because I have to draw 38003 buttons instead of 1000.

More than 1000 buttons

Well, given that I need to make a lot more buttons, I should stop using windows and instead draw a few rectangles on a canvas. This means that solving the challenges with the window scrolling only served to give me more experience.

Xlib provides the function XFillRectangle to draw a rectangle. The function needs the size and the position of the rectangle.

- Size.

- Height is constant. It's clear from the picture.

- Width should be calculated. To be specific, it's a sum the

valueof a perf event plus thevalueof the direct children.

- Position.

- Abscissa (x coordinate) is the sum of the width and the abscissa of the neighbouring element on the left. The abscissa of the first rectangle is equal to the abscissa of its parent plus the

valueof the parent. - Ordinate (y coordinate) is the number of the ascendants of the rectangle multiplied by the height, which is constant.

- Abscissa (x coordinate) is the sum of the width and the abscissa of the neighbouring element on the left. The abscissa of the first rectangle is equal to the abscissa of its parent plus the

The main thing I had been scared of was handling the click and hover events (when the pointer is over a button). Both of the events require finding the button by the position of the cursor.

My initial idea was to make a KD tree, which is not a big deal for 2 dimensions. Luckily, I didn't need to implement KD tree at all. Instead of the tree, I made a simple index for the buttons, which effectively is a vector of vectors. The outer vector represents the vector of rows in the graph. An inner vector represents a row with the buttons. To find the position in the outer vector, I simply divide the ordinate by the height. The complexity of the search is just O(1). The rectangles in the inner vector should be sorted by the abscissa, allowing the button to be found using binary search. Binary search has the complexity O(log(n)). Another stroke of luck is that, in addition to the height being constant, the buttons are already sorted in the input data. This means, that building the index doesn't require any sorting.

fn find_rect<'a, 'node>( button_table: &'a [Rectangle<'node>], position_index: &[impl AsRef<[RectangleIndex]>], pos: Position, ) -> Result<&'a Rectangle<'node>, usize> { let lvl = &position_index .get((pos.1 as u32 / HEIGHT) as usize) .ok_or(0usize)?; lvl.as_ref() .binary_search_by(|((x_left, x_right), _)| { if pos.0 > *x_right { Ordering::Less } else if pos.0 < *x_left { Ordering::Greater } else { Ordering::Equal } }) .map(|x| &button_table[lvl.as_ref()[x].1]) }

It is worth mentioning that while the binary search is good on paper, but it might be bad in the computer, because the CPU processes data in cache lines. When you read an array the CPU reads ahead filling the cache line. This means, if you read an array sequentially, the next element might be in the cache. As a result, the CPU won't go to RAM to fetch that next element. On the other hand, the binary search doesn't read an array sequentially. To confirm that binary search is efficient, I did a simple benchmark. Namely, I compared binary search with sequential reading of the index. In my case, the binary search worked at the same speed, so I left the binary search.

I implemented the lookup and was happy that it worked fast enough and, of cause, correctly. The handling of clicks is not as intensive as the handling of mouse moving. As I wanted to highlight the button under the cursor, I needed to handle the intensity.

Surprisingly, the code for the clicks worked fast enough for mouse moving as well. The same lookup in the index worked just fine and doesn't consume CPU.

Hover events and highlighting

The idea involves drawing a border around a rectangle when the cursor is over it and remove the border when the cursor is beyond the rectangle. The border can be drawn with XDrawRectangle, but drawing the border was not a trick. The trick was removing it, because the picture should be restored to its state before the border has been drawn.

In order to do it, I created an additional instance of Pixmap for the window displaying the graph. The new pixmap helped to make triple buffering to render the graph. Namely, the buffers are:

- The window itself. The thing which the user sees.

- The back buffer. It accumulates the changes and flushes them into the window.

- The backup buffer. It holds the initial picture of the graph and flushes into the window when a button is no longer highlighted.

graph.restore(&ui_factory); // Restore the original picture from the backup buffer to the back buffer. if let Ok(r) = find_rect( &button_table, &position_index, (x_cursor, y_cursor + graph.offset_y), ) { graph.draw_rectangle(&ui_factory, r); graph.set_to_draw(BufferSide::Back); // Draw the picture with the highlighted rectangle to the back buffer. status_line_upper.set_text(&ui_factory, r.node.name()); }

Scrolling the new graph

The last piece is scrolling. All of my previous experiments to make scrolling working were for a bunch of windows, but now, I no longer use windows. Instead of the windows I have just a pixmap. That enabled me to implement my initial idea of scrolling. In particular, I keep track of the offset and copy the pixels from the back buffer into the window using this offset.

Expected challenges

The challenges in the section are well-known. Unfortunately, the solutions and explanations for these challenges aren't that popular - unlike a new JavaScript framework being released two weeks ago. I just want to make another place on the internet where they are discussed.

Drawing with Xlib after certain events

It's confusing when you need to write: "here is the text with this background". You create a window, define its position and the color, and you get nothing. Here is the example of the code which brings you to this situation

fn main() -> Result<(), Box<dyn std::error::Error>> { // initialization begins let mut xlib = ui::new_xlib()?; let mut display = ui::Display::try_new(&xlib)?; let screen = ui::Screen::try_new(&mut xlib, &mut display)?; let mut visual = ui::Visual::try_new(&xlib, &mut display, &screen)?; let root_win = ui::Window::new_root(&xlib, &mut display, &screen); let background = 0x1d2021; let mut main_win = ui::Window::new_main( &xlib, &mut display, &screen, &mut visual, &root_win, (0, 0, 800, 600), background, "FlameGraph Viewer", "FlameGraph Viewer", std::env::args(), )?; let mut pixmap = ui::Pixmap::new(&xlib, &mut display, &main_win); let gc = ui::GraphicContext::new(&xlib, &mut display, &main_win); let mut ui_factory = ui::Factory::new(xlib, display, screen, visual, root_win, gc); let font_data = include_bytes!("../DejaVuSansMono.ttf"); let font = Font::try_from_bytes(font_data as &[u8]).expect("error constructing a Font from bytes"); // initialization ends ui_factory.show_window(&main_win); ui_factory.set_foreground(background); ui_factory.set_background(0xffffff); ui_factory.fill_rectange(&pixmap, (main_win.width(), main_win.height())); draw_caption("RustType", &mut ui_factory, &font, &pixmap, 0xffffff, background); let mut quit = false; while !quit { let event = ui_factory.wait_event(); match event { ui::Event::Expose(_) => (), ui::Event::KeyPress(KEY_Q) => quit = true, ui::Event::KeyPress(key) => println!("key code {:x}", key), ui::Event::ConfigureNotify { width, height } => { main_win.set_width(width as _); main_win.set_height(height as _); pixmap = ui_factory.resize_pixmap(pixmap, &main_win); } }; } Ok(()) }

In Xlib, you don't make UI in the declarative way. Instead, you keep track of the events in the window and redraw it as needed. In this case, the solution is to draw the text only when the Expose event occurs.

fn main() -> Result<(), Box<dyn std::error::Error>> { // initialization begins let mut xlib = ui::new_xlib()?; let mut display = ui::Display::try_new(&xlib)?; let screen = ui::Screen::try_new(&mut xlib, &mut display)?; let mut visual = ui::Visual::try_new(&xlib, &mut display, &screen)?; let root_win = ui::Window::new_root(&xlib, &mut display, &screen); let background = 0x1d2021; let mut main_win = ui::Window::new_main( &xlib, &mut display, &screen, &mut visual, &root_win, (0, 0, 800, 600), background, "FlameGraph Viewer", "FlameGraph Viewer", std::env::args(), )?; let mut pixmap = ui::Pixmap::new(&xlib, &mut display, &main_win); let gc = ui::GraphicContext::new(&xlib, &mut display, &main_win); let mut ui_factory = ui::Factory::new(xlib, display, screen, visual, root_win, gc); let font_data = include_bytes!("../DejaVuSansMono.ttf"); let font = Font::try_from_bytes(font_data as &[u8]).expect("error constructing a Font from bytes"); // initialization ends ui_factory.show_window(&main_win); let mut quit = false; while !quit { let mut is_redraw = false; let event = ui_factory.wait_event(); // we reset the background of the pixmap every event, but the actual drawing happens if the // flat is_redraw is raised. ui_factory.set_foreground(background); ui_factory.set_background(0xffffff); ui_factory.fill_rectange(&pixmap, (main_win.width(), main_win.height())); match event { // here we draw the caption. ui::Event::Expose(0) => { draw_caption("RustType", &mut ui_factory, &font, &pixmap, 0xffffff, background); println!("expose redraw"); is_redraw = true; } // the rest of the events aren't changed. ui::Event::Expose(_) => (), ui::Event::KeyPress(KEY_Q) => quit = true, ui::Event::KeyPress(key) => println!("key code {:x}", key), ui::Event::ConfigureNotify { width, height } => { main_win.set_width(width as _); main_win.set_height(height as _); pixmap = ui_factory.resize_pixmap(pixmap, &main_win); } }; if is_redraw { println!("swap buffers"); ui_factory.swap_buffer(&main_win, &pixmap); } } Ok(()) }

It's annoying at first, but as I approached the implementation of the search feature in the application, I clearly understood the possible states of the application from the code.

- The search should be invalidated after one of the buttons is clicked.

- The keys

nandNshould work only after the search program has successfully finished. - If the search program finishes unsuccessfully, it shouldn't affect the current active search.

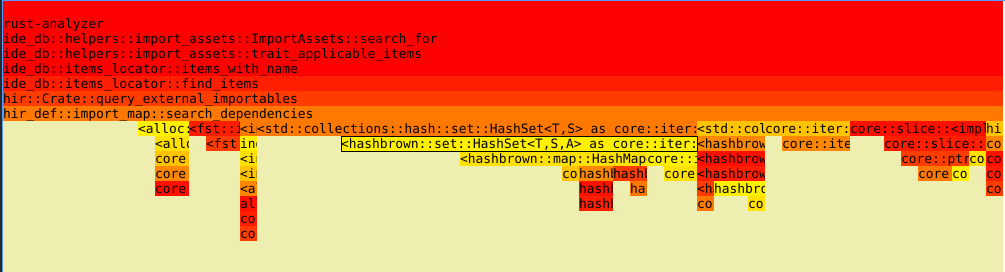

Render only the visible text

Rendering text for every rectangle doesn't really work in this case, because the rectangles are short, while the text is long. As a result, the text goes beyond the rectangle and sets on top of the text of neighboring rectangle. It makes such a mess in which you can't read the names of the rectangles. Luckily, when you draw text at this level, you control every pixel. In order to prevent the mess, I needed to trim that text which goes beyond its button. There was one more reason for the trimming - the drawing of names of the perf events took a couple of minutes. The API of rusttype allows me to get the information of every glyph (in our case it means a character). It allows me to get the coordinates of the pixels of every glyph and draw them on the canvas. As I know the coordinates of the pixels I can omit those ones which are beyond the rectangles.

let scale = Scale::uniform(font_size); let v_metrics = font.v_metrics(scale); let offset = point(0.0, v_metrics.ascent); let glyphs: Vec<_> = font.layout(content.as_ref(), scale, offset).collect(); glyphs.iter().for_each(|g| { if let Some(bb) = g.pixel_bounding_box() { g.draw(|x, y, v| { let color = color_blend(foreground, background, v); let x = x as i32 + bb.min.x + start_at.0; let y = y as i32 + bb.min.y + start_at.1; if ((x - start_at.0) as u32) < width_limit { canvas.draw_point(color, x, y); } }) } });

Once I've drawn the text, I realized that it doesn't make sense to draw text on a very small button, as the first 2-3 letters are unlikely to convey any meaningful idea about the name of the button's perf event. Just one simple condition solves the problem.

button_table.into_iter().for_each(|rect| {

rect.draw(ui_factory, scrollable_pixmap);

if rect.width > 10 {

draw_caption(

rect.node.name(),

&ButtonDrawer {

ui_factory,

pixmap: scrollable_pixmap,

},

font,

FONT_SIZE,

TEXT_COLOR,

rect.color,

rect.pos,

rect.width,

);

}

});

Segmentation fault is not a big deal

One function of Flame Graph Viewer is zooming to a certain frame. If you zoom to a certain frame, you get the traceback to the frame. The traceback consists of all of the parents of the frame. In order to find them, I need to hold a reference to the parent in every frame. So far, Rust doesn't allow the creation of self-referential structures with a regular pointer. If it had allowed, the code would have looked like this:

struct Node<'self> { parent: &'self Node }

In short, it's impossible because the Rust compiler can't determine how long the self reference should live. Details are here.

There are a couple of popular options to deal with it:

- Rc or his brother Arc. This way you can get a memory leak if you forget to clean references.

- An unsafe option is raw pointer. Using this method, you have to deal with dangling pointers and null pointers. In other words, there are three cases:

- It points to an inaccessible memory block. The address 0x0 for example.

- It points the memory block which is freed, but the type of the data matches the type of the pointer.

- It points to the block of memory is freed and filled with some data of the type which doesn't match the type of the pointer.

- Use one of the crates which allows you to create a self-referential structure. Of course, each one has its pros and cons.

- Maintain a vector of objects and keep the index to the parent.

In my case the tree is immutable. The parent of a node lives as long as the tree. Once a parent is created, all of the pointers from its children are valid. This means I will not come across the problems mentioned in point 3. With this in mind, I decided to use raw pointers.

So the structure of node is

struct Node { parent: Option<NonNull<Node>>, // the pointer to the parent children: Vec<Node>, // the children nodes name: String, time: Range<u64>, }

With absolute confidence, I used this structure. Unfortunately, it failed me when I implemented the handlers for the button clicks. Namely, once a button is clicked, the tree is rebuilt, and I see the subtree. The root of the subtree is the button I've pressed. It wasn't even clear in the beginning that I had issues with the memory. Later I found that clicking the same button gives a different subtree. It's definitely a bug.

I realized that the problem is related to the fact that vectors of children move in the memory if they don't have enough space to extend. An extension is needed when you push items into a vector. In this case, the extension involves a process called reallocation. A new space is allocated for the vector. That new space is large enough for the vector to extend. The vector data is copied to the new space in the memory and the capacity of the vector is increased.

The old space of the vector still holds the old data, but the space is marked as unallocated and can be reused. Eventually, the pointer points to the data at the old space. Dereferencing the pointer leads to these 3 situations:

- Segmentation fault if the old space remains unallocated.

- Glitches if the old space is allocated. In my case the old space is reused for the new nodes. I pressed on the parent node, but end up at some unexpected place of the graph.

- Glitches again if the old space is allocated, but the type of the data is different. The pointer is dereferenced, and the data is cast to the type the pointer expects, rather than data which is actually there. It didn't happen in my case, but it's possible.

In this animation, I show two arrows (-1- and -2-) which point to their parents. Then their parents are moved, and the arrows point to an unallocated space. Then the space is reused for the vector of the children of the new Parent 3. The arrow -1- points to the first child (n1p3) of the Parent 3, which means that the child of the Parent 1 thinks that n1p3 is its parent, which is absurd.

I decided to make vectors with a big capacity to prevent the reallocations. It didn't help. I was surprised, but sure that the problem is related to reallocation. I removed the setting of the capacity and made vectors of boxes. Thus, the parent pointer points not to an element of the vector, but to the chunk of memory allocated for the box. Once a vector is moved, the addresses of the boxes are changed, but not the addresses of the nodes in the boxes.

And eventually the code of the structure looks like this

struct Node { parent: Option<NonNull<Node>>, // points to the data on the heap allocated by Box children: Vec<Box<Node>>, // the children nodes name: String, time: Range<u64>, }

Actually, Rust has a container, named Pin, designed for these kinds of situations. It's designed to prevent references to those objects which move around the stack. The moving implies that when you pass an object as an argument to a function, it has a different address. As a result, the parent of a node in a tree should be on the heap. It was hard for me to understand. I got it only when I found how function calling is generally implemented in assembly.

The detail explanation of Pin I understood came from this video. In short the value in the container must satisfy the trait Unpin.

impl<P> Pin<P> where P: Deref, <P as Deref>::Target: Unpin

A better data type would be children: Vec<Pin<Box<Node>>>. I avoided it for now, as I wanted to completely avoid these double references.

Search

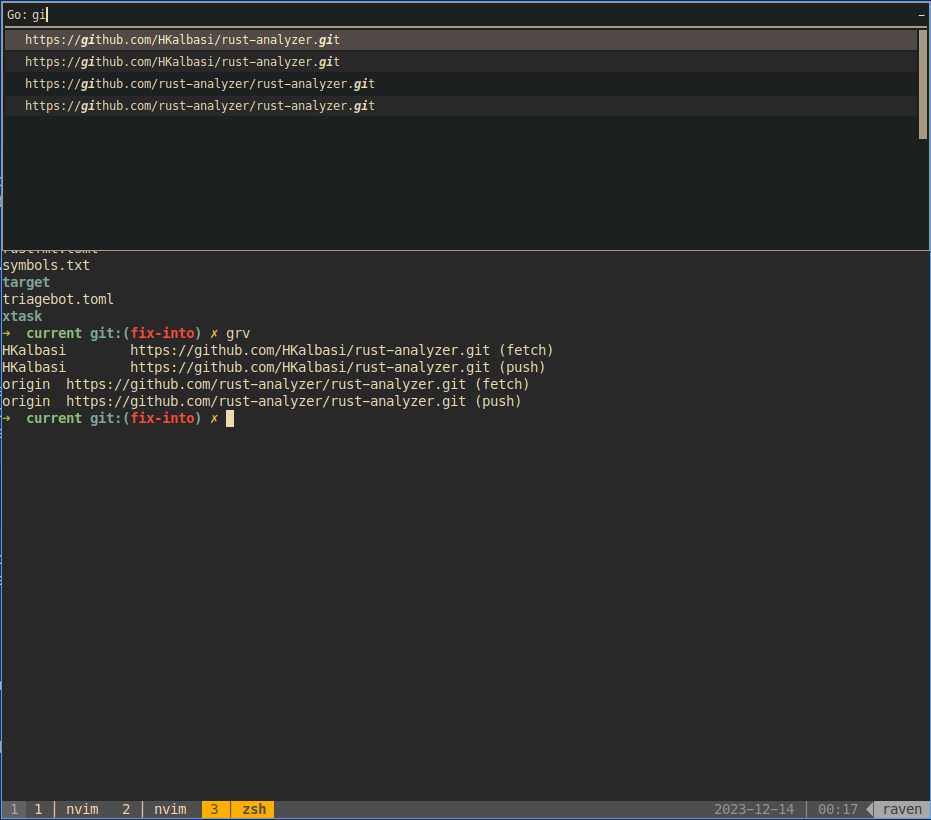

I really missed having a proper search in flamegraphs. Sometimes I preferred to inspect perf events in plain text format instead of the flamegraph. It allows me to use the tools like grep, fzf, sed, ogrep to manipulate the text representation of the perf events and look and examine the hot spots in the program from different angles.

In order to implement a search box, you have to address a few aspects apart from text drawing.

- Selection

- Deletion

- Copying

- Pasting

- Jumping of the caret

I checked the code of ST in order to understand how much work needs to be done. At that moment, I wished I had used some GUI toolkit. Apart from those things, I had to implement the actual search, and I wanted to have the search be fuzzy. This reminded me that I had embedded Rofi into my ST to search for links in the terminal output.

I didn't want to use Rofi for this project. Rofi is good, but it's not as fast as Dmenu. I did a little test with it and my 38003 records were loaded momentarily. Moreover, the search results updated without any delay after I pressed a button. I needed to embed it into my application. X11 allows to set a window of one application to be a parent for a window of another application. Dmenu exposes the functionality, so you don't need to change the source code for it. In order to embed Dmenu into your application, you have to:

- Get the ID of the window of your application. You get it when you call

XCreateWindow. - Invoke Dmenu with the flag

-wwhere you pass the ID.

I added a search box which makes the actual fuzzy search. The default behavior didn't meet my needs. It returned only the picked record. In order to make it usable for me, I added a couple of patches:

- History. Just to pop up the previous search.

- Numbers. This will return the number of the results. It allows me to relate the search results to the buttons in the graph.

- Multiple selection. In case I want to check a couple of buttons with the same name.

- Case-insensitive. The patch allows setting whether the search is case-sensitive or not. Given the case in a symbol name matters, I've added it.

Also, I implemented a feature in Dmenu to select all the results. So:

- Press Enter to add the selected entry to the results and close the window.

- Press Ctrl-Enter to add the current entry to the results.

- Press Alt-Enter to add all of the filtered entries to the results.

Conclusion

What's the bottom line? I got a tool I had needed for a long time. I learnt a few lessons. I demystified a quite popular dogma. I had fun.

The dogma

We don't need to care about the memory and CPU, because we have a lot.

It's fair from one side, but it went too far. The developers started thinking that only one application runs on a user's computer - the application which they create. Let's make everything in a browser, because it has everything. The example of this particular application is not that representative, because the author of FlameGraph created it for himself and wanted to solve his problem. Unintentionally, FlameGraph solved problems of a lot of programmers and system engineers. It helped me quite a few times. This time it failed because of the limits of a browser.

Nowadays we say something like: "We have a lot of memory and CPU, so we don't need to care about it", and after we make a browser application instead of a native client. Inspecting flamegraphs in a browser doesn't work with the amount of Perf Events generated for a more or less big project. I have 16 threads and 64GB RAM. Did it help me? Any browser takes ages to load the generated SVG along with its JS. After loading, it just can't show me a subgraph when I click something.

My solution has two dependencies. I haven't done any optimizations. I just took enough to solve the problem. We should start asking the question: "Do we really need all of this to make a program?". Yes, modern computers have a lot of memory, but they also run many programs besides the one you're developing.

Lessons

- Vector doesn’t guarantee that its data remains at a specific address, even without reallocations. You can't be sure that a raw pointer points to a valid data from a vector. The issue might be specific for Rust. That remains an area to research. The experience showed that a vector of pointers can be safe for raw pointers. And the raw pointers should point to the data which is pointed at by those pointers from that vector.

- There are some X11 applications which can be reused. I'm not sure if it's possible in Wayland. But this seems to be a very neat feature. We do it constantly in websites. We embed audio/video players, calendars, chats (a chat with a consultant), advertisements, VoIP widgets, commercials. We get a script and invoke a command or two from the script. A similar approach was taken with Dmenu - I set a command to invoke it.

- Throwing out extra stuff does not imply harder implementation. Here is where the marketing comes. The total amount of code with tests and comments is 1710 lines. The original implementation of FlameGraph in Perl is 1161 lines. Yes, I wrote more code, but it's not that much if you take into the account these things:

- The speed of the application, which allows to inspect 1000 times bigger flame graphs.

- The fuzzy search. What was possible only if you inspected the text report instead of the graphics.

- Some tests. The original solution has none.

- Defined types to make relations in code explicit.

- The application doesn't require such a big application like a browser.

Fun

It was a really interesting experience. Apart from achieving the program I wanted, I solved a couple of problems that I don’t usually encounter at work:

- I implemented scrolling that doesn’t consume much CPU. Eventually I threw it away, but it was interesting to understand the underlying difficulties.

- Handling clicks by myself was overwhelming, but it was a relief to discover that a simple data structure solved most of the problem. In addition, I was really happy to see the synergy when the same data structure also solved the problem of hover events.

- Dealing with visual glitches. It was scary to see the first blinking of the application. It's not like fixing a bug in transferring data from the database to the frontend and back. You can examine the data, because it's human-readable. With graphics, what are you going to check? The numbers of the matrix of the result picture? I sat down and thought through the process, made a picture of the transitions in the state machine, considered how the canvas is drawn. After that, finding one extra flushing of the buffer fixed the glitch was easy. It made me feel great.

- Finding a segmentation fault was challenging when I was a university student. But after reading a few books, I stopped being scared of these kinds of things. Understanding the underlying processes behind containers and the OS API helped to find the bug fast. But to be fair, I took a break and solved the problem the next day.

Plans

I have a few things I want to see

- Proper resizing without reloading.

- Automated tests of the user interface.

- Usage documentation.

- Some continuous integration would be really nice to have, to run the tests and build the package.

- Create a package for some of the top Linux distributions, or at least for Nix.

- The condition of drawing the text in small rectangles should consider DPI.